Overview

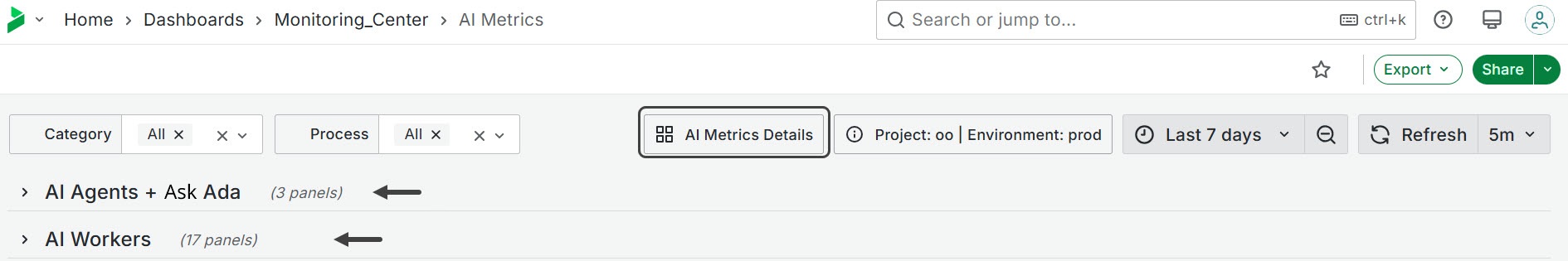

This view opens when you click AI Metrics on the Monitoring Center Home. The AI Metrics detailed dashboard is organized into two main sections, AI Agents + Ask Ada and AI Workers, each corresponding to a process level within different environments. Use the top ribbon to filter by specific categories, processes, or both. You can select multiple processes at once.

For a detailed view of AI processes at the task level, click the AI Metrics Details button located in the top ribbon.

AI Agents + Ask Ada

This section contains 3 panels:

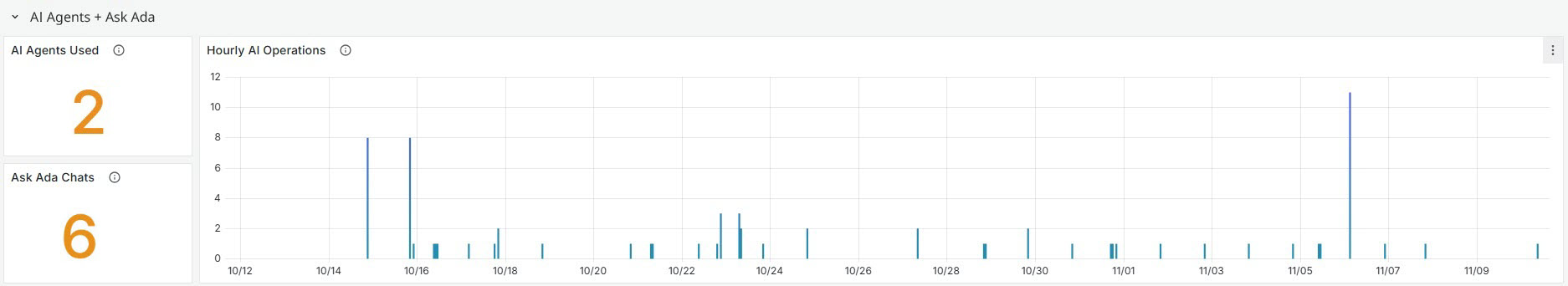

Ask Ada Chats

A chat is the entire session of interactions a user has with the Ask Ada assistant before the conversation context is lost. The conversation keeps its context as long as the user continues interacting without leaving the assistant or the application. During a single chat session, a user may ask multiple questions and receive multiple answers, all within the same continuous interaction.

When the end user exits the Ask Ada interface or the application and returns later, the previous context is lost. Upon returning, any new interactions begin a new chat session, which is counted separately in the chart. This widget displays the total number of chat sessions handled by Ask Ada within the specified period. It calculates this metric by counting distinct chat sessions, providing an overview of user engagement.
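The session rule above can be illustrated with a small sketch. It assumes a hypothetical event log in which each Ask Ada event carries a user ID, a timestamp, and a kind of either "message" or "exit" (leaving Ask Ada or the application). The dashboard's actual data model is not documented here, so `AdaEvent` and `count_chats` are illustrative names only.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class AdaEvent:
    # Hypothetical event record; not the product's real schema.
    user_id: str
    timestamp: datetime
    kind: str  # "message" or "exit" (assumed event types)


def count_chats(events, start, end):
    """Count distinct chat sessions with at least one message in [start, end)."""
    open_session = {}  # user_id -> index of the user's current session
    next_index = {}    # user_id -> index to assign to the user's next session
    sessions = set()
    for ev in sorted(events, key=lambda e: e.timestamp):
        if ev.kind == "exit":
            # Leaving Ask Ada or the application loses the context.
            open_session.pop(ev.user_id, None)
            continue
        if ev.user_id not in open_session:
            # First message after an exit (or ever) starts a new chat.
            idx = next_index.get(ev.user_id, 0)
            open_session[ev.user_id] = idx
            next_index[ev.user_id] = idx + 1
        if start <= ev.timestamp < end:
            sessions.add((ev.user_id, open_session[ev.user_id]))
    return len(sessions)


# Usage: user "a" asks two questions, leaves, and returns; user "b" asks once.
events = [
    AdaEvent("a", datetime(2025, 11, 19, 9, 0), "message"),
    AdaEvent("a", datetime(2025, 11, 19, 9, 5), "message"),
    AdaEvent("a", datetime(2025, 11, 19, 9, 10), "exit"),
    AdaEvent("a", datetime(2025, 11, 19, 9, 30), "message"),
    AdaEvent("b", datetime(2025, 11, 19, 10, 0), "message"),
]
day_start, day_end = datetime(2025, 11, 19), datetime(2025, 11, 20)
```

Here user "a" contributes two chats because the exit breaks the context, and user "b" contributes one, for a total of three.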

AI Agents Used

This widget displays the total number of unique AI agents that have been utilized within the specified timeframe. Each AI agent is counted only once, regardless of how many times it was used during this period.

This metric provides insight into the diversity of AI resources employed over time.
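As a minimal sketch of the "count each agent once" rule, assume a hypothetical log of AI agent calls stored as `(agent_id, timestamp)` pairs; the agent names below are invented for illustration.

```python
from datetime import datetime


def count_agents_used(calls, start, end):
    """Count unique AI agents invoked in [start, end); repeat calls count once."""
    return len({agent_id for agent_id, ts in calls if start <= ts < end})


# Usage: "invoice-agent" is called twice but counted once.
calls = [
    ("invoice-agent", datetime(2025, 11, 19, 9, 0)),
    ("invoice-agent", datetime(2025, 11, 19, 9, 15)),
    ("hr-agent", datetime(2025, 11, 19, 10, 0)),
]
```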

Hourly AI Operations

This widget shows the total number of AI operations conducted on an hourly basis, counting every instance an AI agent is used. Even if the same AI agent is called multiple times, each usage is counted separately.

This metric is useful for monitoring the frequency and distribution of AI activities throughout the day, revealing patterns such as peak operational periods and times of inactivity.
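Unlike AI Agents Used, this widget counts every call. A minimal sketch, assuming the same hypothetical `(agent_id, timestamp)` call log, buckets calls by clock hour:

```python
from collections import Counter
from datetime import datetime


def hourly_operations(calls):
    """Count every AI agent call per clock hour; repeated calls are all counted."""
    counts = Counter(
        ts.replace(minute=0, second=0, microsecond=0) for _agent_id, ts in calls
    )
    return dict(sorted(counts.items()))


# Usage: two calls in the 9:00 hour (same agent), one in the 10:00 hour.
calls = [
    ("invoice-agent", datetime(2025, 11, 19, 9, 0)),
    ("invoice-agent", datetime(2025, 11, 19, 9, 15)),
    ("hr-agent", datetime(2025, 11, 19, 10, 0)),
]
```

Hours with no calls simply do not appear in the result; a chart would show them as zero, which is how periods of inactivity become visible.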

AI Workers

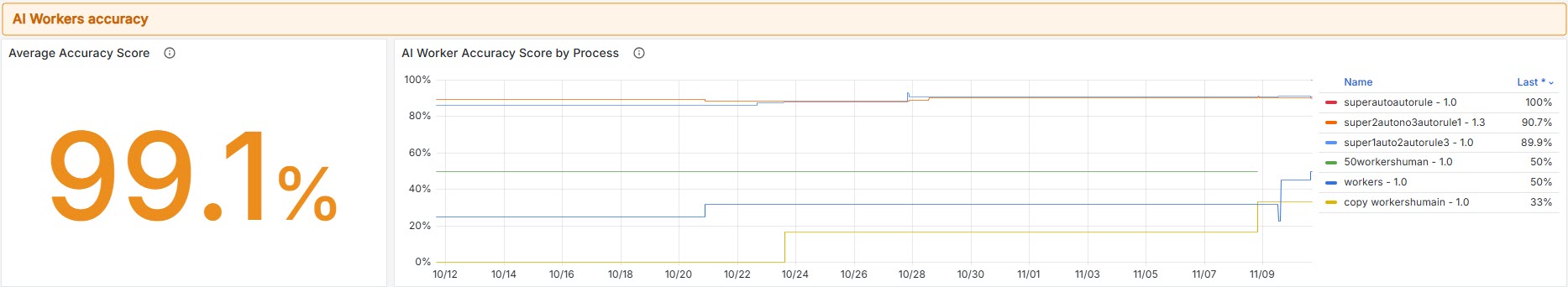

This section displays graphs for AI Workers at the process level. It is divided into four subsections containing 17 panels.

This subsection presents the accuracy metrics of AI Workers at the process level and shows how accuracy evolves over time. It displays both the % Accuracy Score of Workers widget shown in the AI Metrics Home view and an AI Worker Accuracy Score by Process graph, in which you can select multiple processes at once for comparison.

This metric reflects the reliability of tasks powered by AI Workers within each process. It measures how accurately AI Workers complete Form-based tasks by analyzing the extent of human corrections made after the AI Worker has finished its work. The accuracy score is calculated based on the number of manual edits applied to Form fields post-AI Worker completion.

Fewer human corrections indicate higher accuracy, while frequent interventions suggest areas where the AI Worker may need further training. This score provides insight into the performance and training effectiveness of AI Workers. It helps you pinpoint opportunities for optimization, improve automation quality, and build trust in AI Worker-driven processes.
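The exact accuracy formula is not documented here beyond "based on the number of manual edits". The sketch below assumes one plausible definition: the share of AI Worker-filled Form fields left unedited, pooled across all tasks in a process. `WorkerTask`, `form_fields`, and `fields_edited` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class WorkerTask:
    process: str
    form_fields: int    # Form fields the AI Worker filled in (assumed)
    fields_edited: int  # fields a person changed after the AI Worker finished


def accuracy_by_process(tasks):
    """Assumed score: 100 * (1 - edited fields / filled fields) per process."""
    totals = {}  # process -> (filled fields, edited fields)
    for t in tasks:
        fields, edited = totals.get(t.process, (0, 0))
        totals[t.process] = (fields + t.form_fields, edited + t.fields_edited)
    return {
        process: round(100 * (1 - edited / fields), 1)
        for process, (fields, edited) in totals.items()
        if fields  # skip processes with no AI-filled fields
    }


# Usage: one correction across 20 fields vs. four corrections across 8 fields.
tasks = [
    WorkerTask("Invoices", 10, 1),
    WorkerTask("Invoices", 10, 0),
    WorkerTask("Claims", 8, 4),
]
```

Pooling fields rather than averaging per-task scores weights larger forms more heavily; if the product averages per task instead, the numbers would differ.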

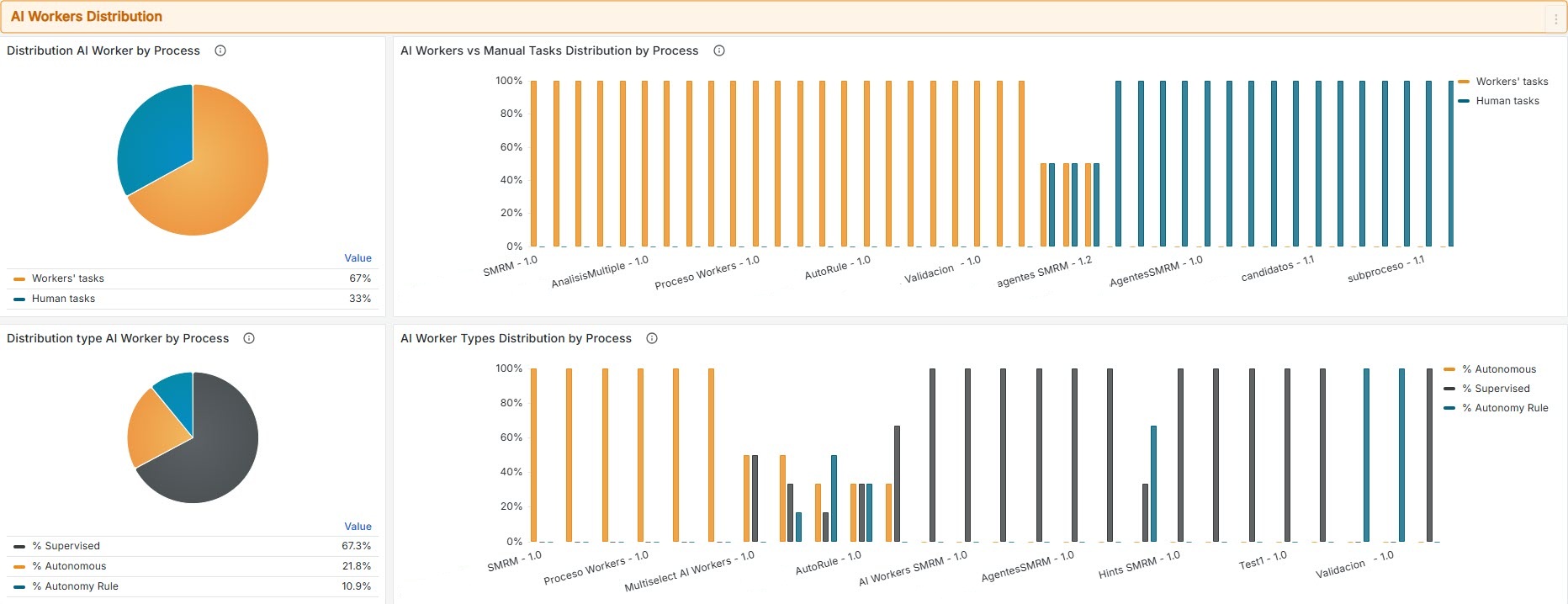

This subsection presents metrics on the distribution of AI Workers at the process level, organized into two main visualizations: AI Workers versus Manual Tasks Distribution by Process and AI Worker Types Distribution by Process.

AI Workers vs. Manual Tasks Distribution by Process

This metric shows the task distribution within each process, comparing tasks with AI Workers enabled and tasks without AI Workers. It is complemented by a Distribution of AI Worker by Process chart, which shows the percentage split between AI Worker-driven tasks and human-executed tasks.
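A minimal sketch of the percentage split, assuming a hypothetical task list of `(process, ai_worker_enabled)` pairs:

```python
from collections import defaultdict


def ai_vs_manual_split(tasks):
    """Return the % of AI Worker vs. manual tasks for each process."""
    counts = defaultdict(lambda: [0, 0])  # process -> [ai_worker, manual]
    for process, ai_enabled in tasks:
        counts[process][0 if ai_enabled else 1] += 1
    split = {}
    for process, (ai, manual) in counts.items():
        total = ai + manual
        split[process] = {
            "ai_worker": 100 * ai / total,
            "manual": 100 * manual / total,
        }
    return split


# Usage: three AI Worker tasks and one manual task in "Invoices".
tasks = [("Invoices", True), ("Invoices", True), ("Invoices", True), ("Invoices", False)]
```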

AI Worker Types Distribution by Process

This metric displays the distribution of AI Worker types within each process, categorizing tasks based on their level of automation. It is complemented by a Distribution type AI Worker by Process chart, which shows the percentage split between the following AI Worker types:

•Supervised: Manual tasks that require human review and intervention.

•Autonomy Rules: Semi-automated tasks that progress based on predefined business rules.

•Autonomous: Fully automated tasks that do not require human intervention.
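The type breakdown can be sketched the same way. This assumes a hypothetical list of `(process, worker_type)` pairs using the three type names above; how the product actually stores the type is not documented here.

```python
from collections import Counter, defaultdict

AI_WORKER_TYPES = ("Supervised", "Autonomy Rules", "Autonomous")


def worker_type_split(tasks):
    """Return the % of each AI Worker type within each process."""
    by_process = defaultdict(Counter)
    for process, worker_type in tasks:
        by_process[process][worker_type] += 1
    result = {}
    for process, counts in by_process.items():
        total = sum(counts.values())
        result[process] = {t: 100 * counts[t] / total for t in AI_WORKER_TYPES}
    return result


# Usage: one Supervised, one Autonomy Rules, and two Autonomous tasks.
tasks = [
    ("Invoices", "Supervised"),
    ("Invoices", "Autonomy Rules"),
    ("Invoices", "Autonomous"),
    ("Invoices", "Autonomous"),
]
```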

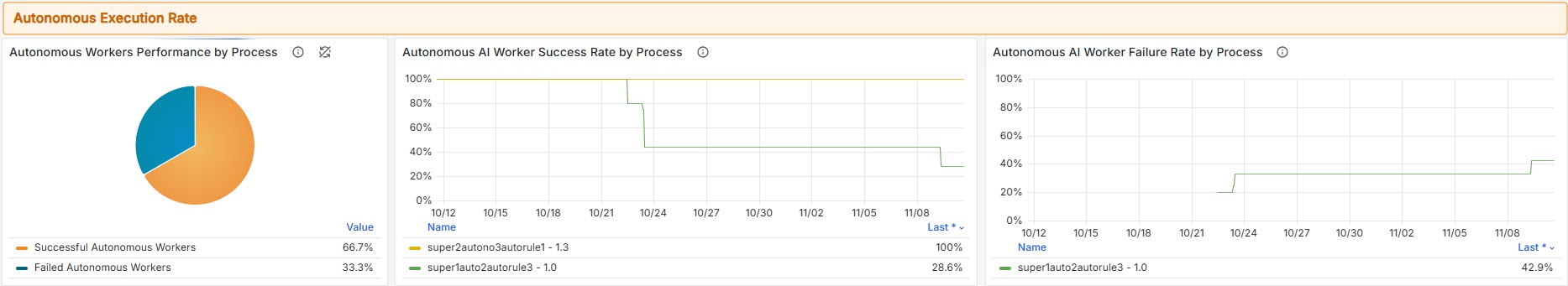

This subsection provides key performance metrics related to the autonomous execution of AI Workers at the process level to help assess their reliability and effectiveness.

Autonomous AI Worker Success Rate by Process

This metric shows the percentage of autonomous tasks within each process that were successfully completed without any human intervention. It serves as a key performance indicator for evaluating the reliability of AI Workers and the overall effectiveness of automation. High success rates suggest that AI Workers are well-trained and capable of independently handling their assigned tasks.

Autonomous AI Worker Failure (Fallback) Rate by Process

This metric indicates the percentage of autonomous tasks that failed to execute automatically and required manual intervention to proceed. Failures may result from unexpected errors or edge cases not covered by the AI Worker’s training or rule set. Monitoring this metric is essential for identifying reliability issues and improving the robustness of AI Workers.
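The sketch below computes both rates, assuming a hypothetical list of `(process, fell_back)` pairs for autonomous tasks. It also assumes every autonomous task either completes on its own or falls back, so the two rates sum to 100%. The documentation does not confirm this, and tasks still in progress might be excluded or counted differently.

```python
from collections import defaultdict


def autonomous_rates(tasks):
    """Return success and fallback rates (%) for autonomous tasks per process."""
    counts = defaultdict(lambda: [0, 0])  # process -> [succeeded, fell_back]
    for process, fell_back in tasks:
        counts[process][1 if fell_back else 0] += 1
    return {
        process: {
            "success_rate": 100 * ok / (ok + fb),
            "fallback_rate": 100 * fb / (ok + fb),
        }
        for process, (ok, fb) in counts.items()
    }


# Usage: four autonomous "Claims" tasks, one of which needed manual intervention.
tasks = [("Claims", False), ("Claims", False), ("Claims", False), ("Claims", True)]
```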

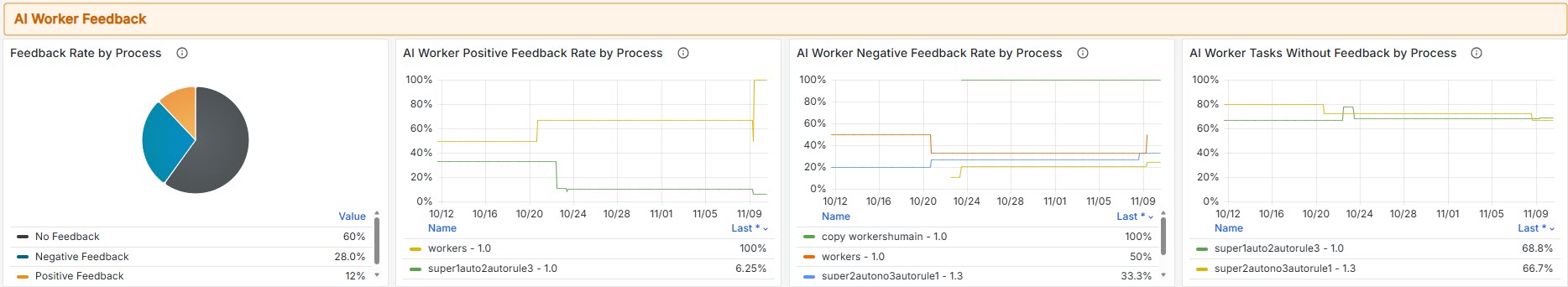

This subsection presents feedback metrics for AI Workers at the process level, offering insights into user satisfaction and performance quality.

AI Worker Positive Feedback Rate by Process

This metric shows the percentage of tasks within each process that received positive feedback ratings. It reflects user satisfaction and the perceived quality of AI Worker performance across different business processes, helping identify the most successful AI Worker implementations.

AI Worker Negative Feedback Rate by Process

This metric shows the percentage of tasks within each process that received negative feedback ratings. It helps identify problematic AI Worker implementations and processes that may require improvement or additional training.

Monitoring negative feedback is essential to maintaining high performance standards and user satisfaction.

AI Worker Tasks Without Feedback by Process

This metric shows the percentage of tasks within each process that did not receive any feedback, neither positive nor negative. High percentages may indicate low user engagement, missing feedback mechanisms, or tasks completed without user interaction. This insight helps uncover opportunities to improve feedback collection and encourage user participation.
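The three feedback metrics partition each process's tasks, so they can be sketched together. This assumes a hypothetical list of `(process, feedback)` pairs in which `feedback` is "positive", "negative", or `None` when no rating was given.

```python
from collections import Counter, defaultdict


def feedback_rates(tasks):
    """Return % of positive, negative, and no-feedback tasks per process."""
    by_process = defaultdict(Counter)
    for process, feedback in tasks:
        by_process[process][feedback or "none"] += 1
    rates = {}
    for process, counts in by_process.items():
        total = sum(counts.values())
        rates[process] = {
            k: 100 * counts[k] / total for k in ("positive", "negative", "none")
        }
    return rates


# Usage: two positive ratings, one negative, and two tasks without feedback.
tasks = [
    ("Invoices", "positive"),
    ("Invoices", "positive"),
    ("Invoices", "negative"),
    ("Invoices", None),
    ("Invoices", None),
]
```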

To access AI Metrics Trends and Forecast (AI Operations Per Hour), go to the AI Metrics Details section.

Last Updated 11/19/2025 11:06:07 AM