Overview

This dashboard is opened from the AI Metrics Dashboard and shows a detailed view of AI usage metrics at the task level.

AI Agents + Ask Ada

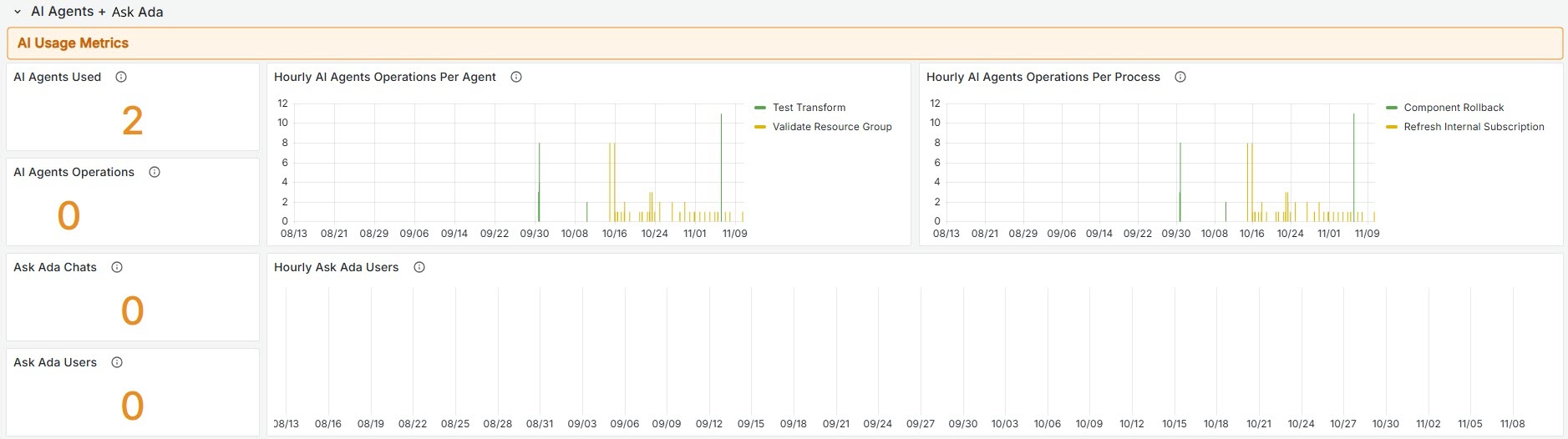

AI Usage Metrics

AI Agents Used

This widget displays the number of unique AI agents currently being used in the project. Think of it as a headcount of distinct AI agents.

AI Agents Operations

This metric tracks the total number of actions or tasks performed by the AI agents. Unlike the "AI Agents Used" metric, this one counts every operation, regardless of whether multiple operations are performed by the same agent.
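The difference between the two counts can be shown with a minimal Python sketch. The `AgentOperation` record and its fields are hypothetical and do not reflect the product's actual data model. Distinct agent IDs give "AI Agents Used", while every record counts toward "AI Agents Operations".

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentOperation:
    """One AI agent operation record (hypothetical schema for illustration)."""
    agent_id: str
    process: str
    timestamp: str  # ISO 8601, e.g. "2025-11-19T10:15:00"


def ai_agents_used(ops: list[AgentOperation]) -> int:
    """Headcount of distinct agents: each agent is counted once."""
    return len({op.agent_id for op in ops})


def ai_agents_operations(ops: list[AgentOperation]) -> int:
    """Every operation is counted, including repeats by the same agent."""
    return len(ops)


ops = [
    AgentOperation("agent-a", "Invoices", "2025-11-19T09:05:00"),
    AgentOperation("agent-a", "Invoices", "2025-11-19T09:40:00"),
    AgentOperation("agent-b", "Onboarding", "2025-11-19T10:10:00"),
]
print(ai_agents_used(ops))        # 2
print(ai_agents_operations(ops))  # 3
```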

Hourly AI Agents Operations Per AI Agent

This widget breaks down the number of operations executed by each AI agent on an hourly basis. The data is aggregated as a count of operations per hour for each agent.

Hourly AI Agents Operations Per Process

This widget displays the number of operations conducted by AI agents, categorized by process, on an hourly basis. The data is aggregated and presented as a count of operations per hour for each distinct process.
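The two hourly widgets above differ only in the grouping key. A minimal sketch, assuming hypothetical operation records of the form (agent ID, process, ISO timestamp), truncates each timestamp to the start of its hour and counts records per (hour, agent) or (hour, process) pair:

```python
from collections import Counter
from datetime import datetime

# Hypothetical operation records: (agent_id, process, ISO timestamp)
ops = [
    ("agent-a", "Invoices", "2025-11-19T09:05:00"),
    ("agent-a", "Invoices", "2025-11-19T09:40:00"),
    ("agent-b", "Onboarding", "2025-11-19T09:50:00"),
    ("agent-b", "Invoices", "2025-11-19T10:10:00"),
]


def hour_bucket(ts: str) -> datetime:
    """Truncate a timestamp to the start of its hour."""
    return datetime.fromisoformat(ts).replace(minute=0, second=0, microsecond=0)


def hourly_counts(records, key_index: int) -> Counter:
    """Count operations per (hour, key); key_index 0 = agent, 1 = process."""
    return Counter((hour_bucket(r[2]), r[key_index]) for r in records)


per_agent = hourly_counts(ops, key_index=0)
per_process = hourly_counts(ops, key_index=1)

for (hour, agent), n in sorted(per_agent.items()):
    print(hour.strftime("%H:00"), agent, n)
# 09:00 agent-a 2
# 09:00 agent-b 1
# 10:00 agent-b 1
```

The per-process view uses the same function with `key_index=1`, so `per_process` holds, for example, a count of 2 for Invoices in the 09:00 hour.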

Ask Ada Chats

This widget displays the number of chats initiated with Ask Ada during the selected time period.

Ask Ada Users

This widget shows the number of users interacting with Ask Ada during the selected time period.
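A minimal sketch of the two Ask Ada counts over a time range, using a hypothetical chat log of (chat ID, user ID, start time) tuples. It assumes that "Ask Ada Users" counts each user once even when that user started several chats, which this page does not state explicitly:

```python
from datetime import datetime

# Hypothetical chat log: (chat_id, user_id, start time)
chats = [
    ("c1", "alice", datetime(2025, 11, 19, 9, 5)),
    ("c2", "alice", datetime(2025, 11, 19, 9, 30)),
    ("c3", "bob", datetime(2025, 11, 19, 11, 0)),
    ("c4", "carol", datetime(2025, 11, 18, 16, 0)),  # outside the range below
]


def in_range(chats, start: datetime, end: datetime):
    """Keep chats that started in the half-open interval [start, end)."""
    return [c for c in chats if start <= c[2] < end]


def ask_ada_chats(chats, start, end) -> int:
    """Every chat in the range counts."""
    return len(in_range(chats, start, end))


def ask_ada_users(chats, start, end) -> int:
    """Assumption: a user with several chats is counted once."""
    return len({c[1] for c in in_range(chats, start, end)})


start, end = datetime(2025, 11, 19), datetime(2025, 11, 20)
print(ask_ada_chats(chats, start, end))  # 3
print(ask_ada_users(chats, start, end))  # 2
```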

Hourly Ask Ada Users

This widget tracks the number of unique users interacting with Ask Ada on an hourly basis. The data is aggregated by counting distinct users for each hour.
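The hourly distinct-user aggregation can be sketched as follows, assuming hypothetical (user ID, timestamp) interaction events. Users are collected in a set per hour, so repeat interactions by the same user within one hour are counted once:

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical Ask Ada interaction events: (user_id, timestamp)
events = [
    ("alice", datetime(2025, 11, 19, 9, 5)),
    ("alice", datetime(2025, 11, 19, 9, 45)),
    ("bob", datetime(2025, 11, 19, 9, 50)),
    ("alice", datetime(2025, 11, 19, 10, 15)),
]


def hourly_unique_users(events) -> dict:
    """Map each hour to the number of distinct users active in that hour."""
    users_by_hour = defaultdict(set)
    for user_id, ts in events:
        hour = ts.replace(minute=0, second=0, microsecond=0)
        users_by_hour[hour].add(user_id)
    return {hour: len(users) for hour, users in sorted(users_by_hour.items())}


for hour, count in hourly_unique_users(events).items():
    print(hour.strftime("%H:00"), count)
# 09:00 2
# 10:00 1
```

A user active in several hours is counted in each of them, so summing the hourly values can exceed the number of distinct users for the whole period.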

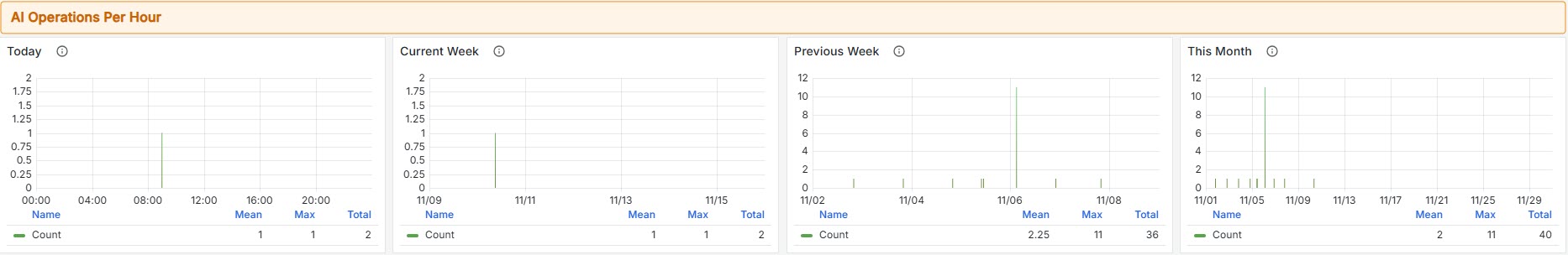

AI Operations per Hour

This panel displays the number of AI operations per hour, combining AI Agent operations and Ask Ada interactions, for today, the current week, the previous week, and the current month. It also provides summary statistics (mean, maximum, and sum) of the hourly operation counts.
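A minimal sketch of the hourly combination and summary statistics, assuming hypothetical lists of event timestamps for each source and that the chosen period (today, a week, or the month) has already been applied as a filter. Hours with no operations are omitted here, which is an assumption; if the panel counts such hours as zeros, the mean would be lower:

```python
from collections import Counter
from datetime import datetime
from statistics import mean

# Hypothetical event timestamps from the two sources
agent_ops = [
    datetime(2025, 11, 19, 9, 5),
    datetime(2025, 11, 19, 9, 40),
    datetime(2025, 11, 19, 11, 20),
]
ada_interactions = [
    datetime(2025, 11, 19, 9, 15),
    datetime(2025, 11, 19, 10, 0),
]


def hourly_totals(*sources) -> Counter:
    """Combine all sources and count AI operations per hour.

    Assumption: only hours with at least one operation appear in the result.
    """
    return Counter(
        ts.replace(minute=0, second=0, microsecond=0)
        for source in sources
        for ts in source
    )


def summarize(counts: Counter) -> dict:
    """Mean (rounded to 2 decimals), maximum, and sum of the hourly counts."""
    values = list(counts.values())
    return {"mean": round(mean(values), 2), "max": max(values), "sum": sum(values)}


totals = hourly_totals(agent_ops, ada_interactions)
print(summarize(totals))  # {'mean': 1.67, 'max': 3, 'sum': 5}
```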

AI Workers

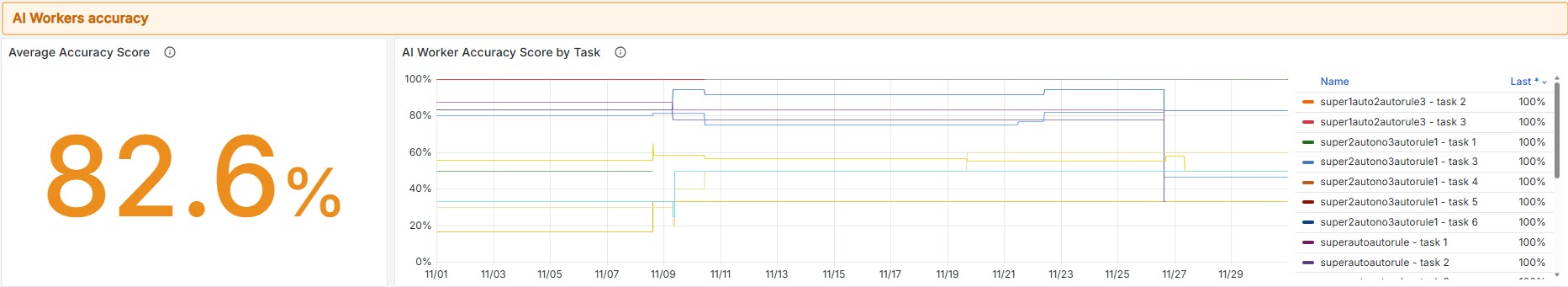

AI Workers Accuracy

This subsection presents the accuracy metric of AI Workers at the task level:

AI Worker Accuracy Score by Task

This metric shows the accuracy percentage for each task enabled with AI Workers. The accuracy score is calculated based on human modifications made to Form fields after the AI Worker has completed its task. Tasks requiring fewer human corrections receive higher accuracy scores, while those needing significant intervention score lower. This metric helps assess the effectiveness of AI Worker training at a granular level and identify specific tasks that may need improvement.
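This page does not document the exact scoring formula. As an illustration only, the sketch below assumes the score is the share of AI-filled Form fields left unmodified by humans, pooled across all executions of a task. The record layout and task names are hypothetical:

```python
from collections import defaultdict

# Hypothetical per-execution records:
# (task, fields filled by the AI Worker, fields a human modified afterward)
# Assumption: accuracy = unmodified fields / filled fields, pooled per task.
executions = [
    ("Classify Invoice", 10, 0),
    ("Classify Invoice", 10, 2),
    ("Extract Contract Data", 8, 4),
]


def accuracy_by_task(executions) -> dict:
    """Return an accuracy percentage per task under the assumption above."""
    filled = defaultdict(int)
    modified = defaultdict(int)
    for task, n_filled, n_modified in executions:
        filled[task] += n_filled
        modified[task] += n_modified
    return {
        task: round(100 * (filled[task] - modified[task]) / filled[task], 1)
        for task in filled
    }


print(accuracy_by_task(executions))
# {'Classify Invoice': 90.0, 'Extract Contract Data': 50.0}
```

Fewer human corrections yield a higher score, matching the behavior described above; averaging per-execution scores instead of pooling fields would be an equally plausible variant.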

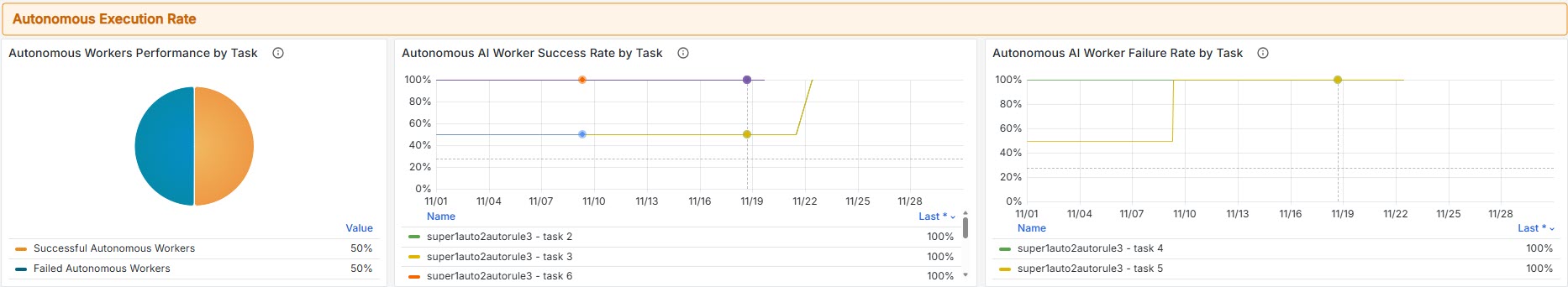

Autonomous Execution Rate

This subsection provides autonomous execution metrics for AI Workers at the task level, offering key performance indicators to evaluate reliability and automation effectiveness in specific tasks:

Autonomous AI Worker Success Rate by Task

Shows, for each autonomous task, the percentage of executions that completed successfully without any human intervention. This is a key performance indicator for assessing the reliability of AI Workers at a granular level. High success rates indicate well-trained and robust AI Workers capable of independently handling their assigned tasks.

Autonomous AI Worker Failure (Fallback) Rate by Task

Displays, for each autonomous task, the percentage of executions that failed and required manual intervention to proceed. Failures may result from unexpected errors or edge cases not covered by the AI Worker’s training or rule set. Monitoring this metric is critical for identifying task-specific reliability issues and improving AI Worker robustness in a targeted manner.
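The two autonomous execution rates can be sketched together, assuming each autonomous execution is recorded with a hypothetical (task, completed_autonomously) outcome and that every execution either succeeds or falls back, so the two rates for a task add up to 100%:

```python
from collections import Counter

# Hypothetical outcomes of autonomous executions: (task, completed_autonomously).
# False means the execution fell back to manual intervention.
outcomes = [
    ("Approve Expense", True),
    ("Approve Expense", True),
    ("Approve Expense", True),
    ("Approve Expense", False),
    ("Route Ticket", True),
    ("Route Ticket", False),
]


def autonomous_rates(outcomes) -> dict:
    """Success and fallback percentages per task.

    Assumption: success and fallback are the only two outcomes.
    """
    total = Counter(task for task, _ in outcomes)
    succeeded = Counter(task for task, ok in outcomes if ok)
    rates = {}
    for task, n in total.items():
        success = 100 * succeeded[task] / n
        rates[task] = {"success": success, "fallback": 100 - success}
    return rates


for task, r in autonomous_rates(outcomes).items():
    print(task, r["success"], r["fallback"])
# Approve Expense 75.0 25.0
# Route Ticket 50.0 50.0
```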

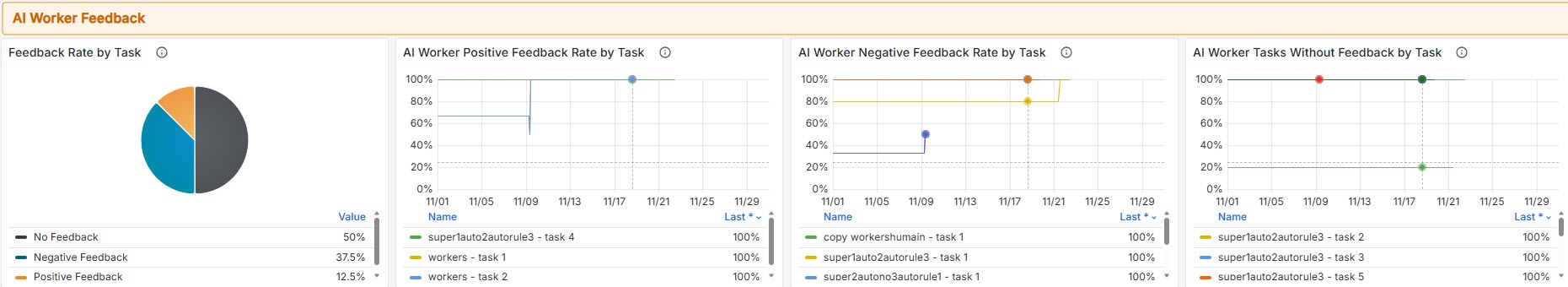

AI Worker Feedback

This subsection presents feedback metrics for AI Workers at the task level, providing indicators of user satisfaction and performance quality for specific tasks:

AI Worker Positive Feedback Rate by Task

Shows the percentage of executions for each task that received positive feedback ratings. This metric reflects user satisfaction and the perceived quality of AI Worker performance at a granular level, helping identify which tasks have the most successful AI Worker implementations.

AI Worker Negative Feedback Rate by Task

Displays the percentage of executions for each task that received negative feedback ratings. This helps identify AI Worker implementations in specific tasks that may require improvement or additional training. Monitoring negative feedback at the task level is essential for maintaining AI Worker quality.

AI Worker Tasks Without Feedback by Task

Shows the percentage of executions for each task that did not receive any feedback—neither positive nor negative. High percentages may indicate low user engagement, missing feedback mechanisms for certain activities, or tasks completed without user interaction. This metric helps identify opportunities to improve feedback collection and enhance user participation at the task level.
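A minimal sketch of the three feedback rates, assuming each execution carries a hypothetical rating of "positive", "negative", or None when no feedback was given. Because every execution falls into exactly one bucket, the three percentages for a task sum to 100%:

```python
from collections import Counter, defaultdict

# Hypothetical feedback per execution: (task, rating), where rating is
# "positive", "negative", or None for no feedback.
feedback = [
    ("Summarize Case", "positive"),
    ("Summarize Case", "positive"),
    ("Summarize Case", "negative"),
    ("Summarize Case", None),
    ("Draft Reply", None),
    ("Draft Reply", "positive"),
]


def feedback_rates(feedback) -> dict:
    """Positive, negative, and no-feedback percentages per task."""
    by_task = defaultdict(Counter)
    for task, rating in feedback:
        by_task[task][rating] += 1
    rates = {}
    for task, counts in by_task.items():
        n = sum(counts.values())
        rates[task] = {
            "positive": 100 * counts["positive"] / n,
            "negative": 100 * counts["negative"] / n,
            "none": 100 * counts[None] / n,
        }
    return rates


rates = feedback_rates(feedback)
print(rates["Summarize Case"])  # {'positive': 50.0, 'negative': 25.0, 'none': 25.0}
```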

Last Updated 11/19/2025 11:07:53 AM