Overview

The Open AI connector for Bizagi is available for download at Bizagi Connector XChange.

Through this connector, you can use different natural language models and artificial intelligence technologies. The connector lets users create completions, images, chatbots, edits, and moderation checks.

This connector was developed according to the contents of the API and the information about it provided by Open AI. Bizagi and its subsidiaries do not provide any kind of guarantee over the content or over errors caused by calling the API services. Bizagi and its subsidiaries are not responsible for any loss, cost, or damage resulting from calls to Open AI's API.

Before you start

To test and use this connector, you will need:

1.Bizagi Studio previously installed.

2.This connector previously installed, via the Connectors Xchange as described at https://help.bizagi.com/platform/en/index.html?Connectors_Xchange.htm, or through a manual installation as described at https://help.bizagi.com/platform/en/index.html?connectors_setup.htm

3.Your own API key, generated as described in the Open AI documentation.

Configuring the connector

To configure the connector (in particular its authentication parameters), follow the steps presented at the Configuration chapter in https://help.bizagi.com/platform/en/index.html?connectors_setup.htm

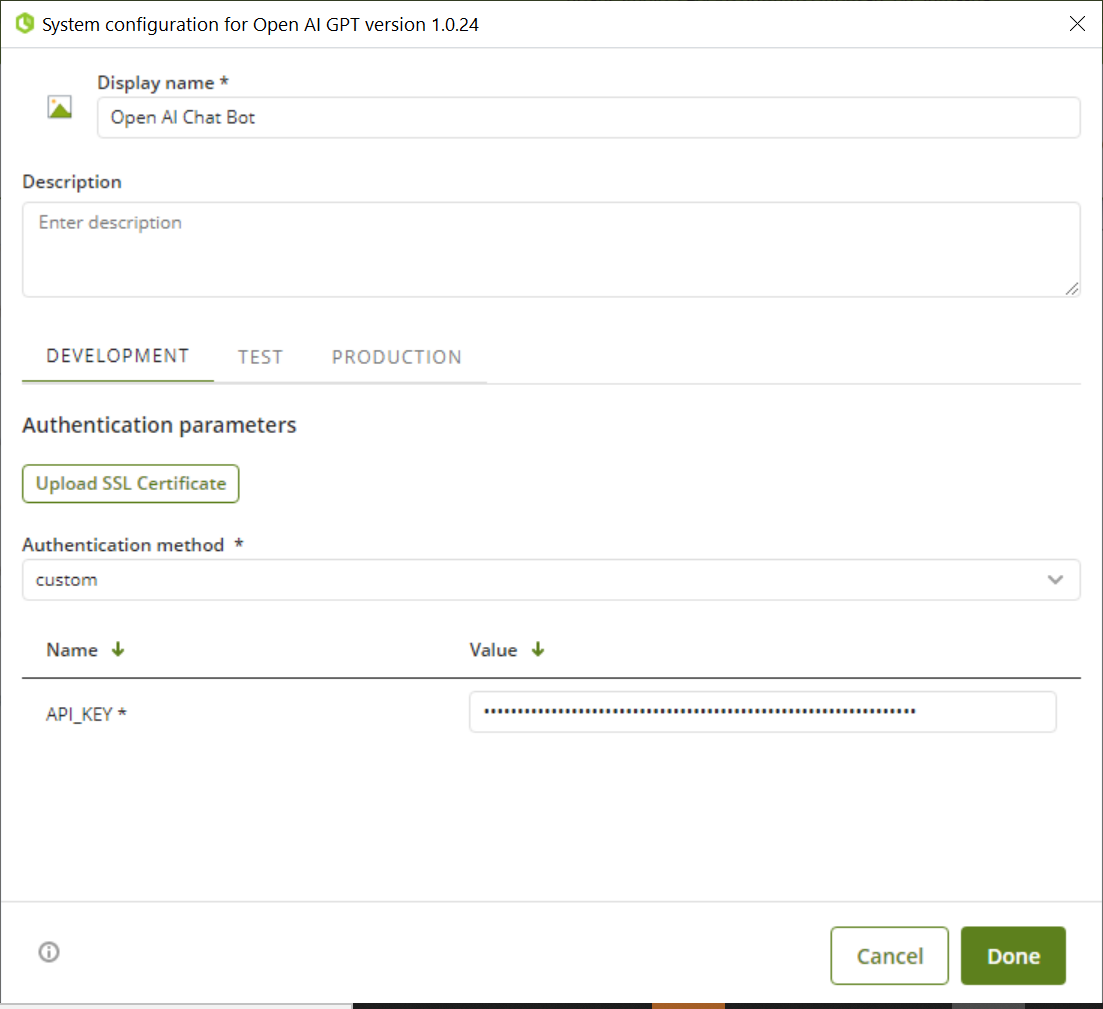

For this configuration, consider the following authentication parameters:

•API_KEY: Open AI key.

The configuration of the Connector should look like the following:
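For background on what the API_KEY parameter is used for: Open AI's REST API expects the key in an `Authorization: Bearer` header. The sketch below builds such headers. The helper name `build_auth_headers` is hypothetical, and how the connector assembles its requests internally is an assumption rather than something documented here.

```python
def build_auth_headers(api_key: str) -> dict:
    """Return HTTP headers carrying the Open AI key as a Bearer token."""
    key = api_key.strip()
    if not key:
        raise ValueError("API_KEY must not be empty")
    return {
        "Authorization": f"Bearer {key}",
        "Content-Type": "application/json",
    }


# Placeholder value only; never hard-code a real key.
headers = build_auth_headers("sk-example-key")
```

Keeping the key in the connector configuration, rather than in process rules or expressions, means it only has to be rotated in one place.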

Using the connector

This connector features a set of methods which let you use Open AI's API services to take advantage of its capabilities.

To learn overall how/where to configure the use of a connector, refer to https://help.bizagi.com/platform/en/index.html?Connectors_Studio.htm.

Available Actions

Create chat

With this action, users can create a chatbot powered by any of the available Open AI NLP models.

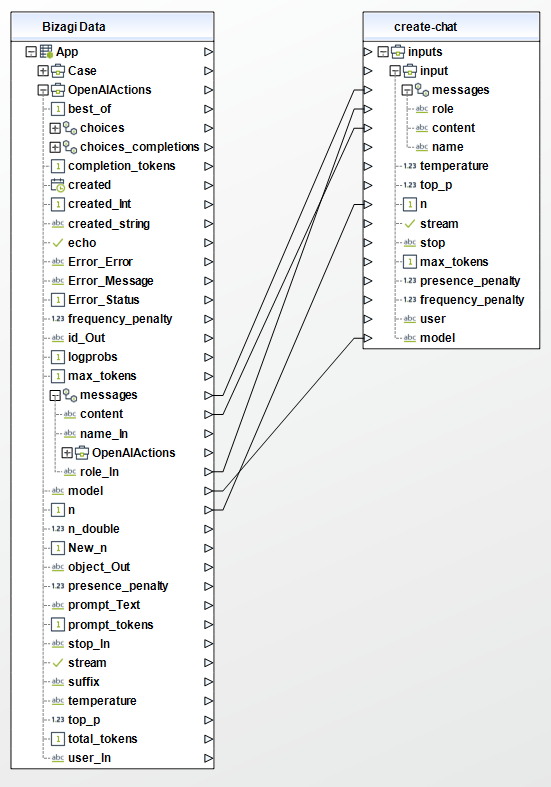

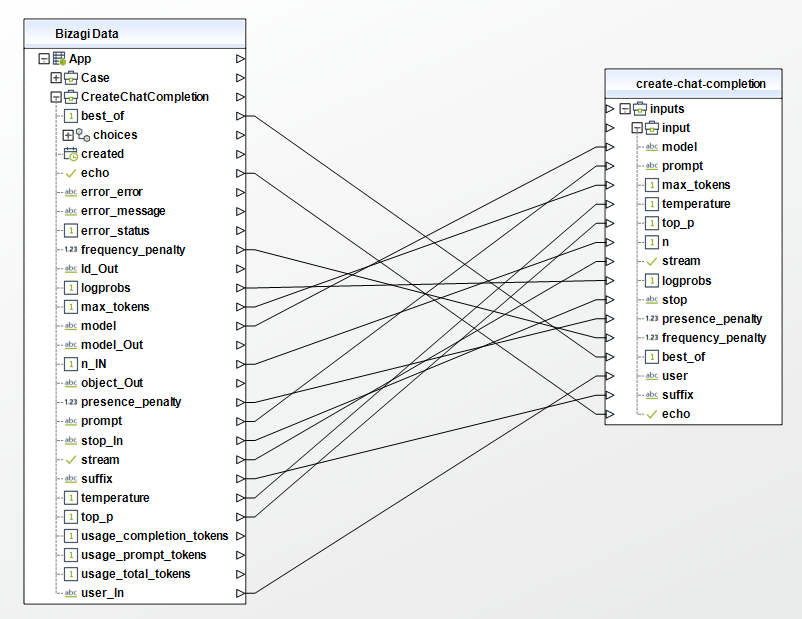

To configure its inputs, take into account the following descriptions:

•model (string - Required): ID of the model to use (gpt-4, gpt-4-0314, gpt-4-32k, gpt-4-32k-0314, gpt-3.5-turbo, gpt-3.5-turbo-0301). See the model endpoint compatibility table for details on which models work with the Chat API.

•messages (array - Required): A list of messages describing the conversation so far.

orole (string - Required): The role of the author of this message. One of system, user, or assistant.

ocontent (string - Required): The contents of the message.

Further inputs may be used. For more information, visit the Open AI Create chat completion documentation.
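The inputs above can be pictured as the following structure. This is a minimal sketch in Python, assuming the connector ultimately sends a JSON body with the same field names; the helper `build_chat_request` and the example messages are hypothetical.

```python
import json

ALLOWED_ROLES = {"system", "user", "assistant"}


def build_chat_request(model: str, messages: list) -> dict:
    """Check the required inputs and return the request body."""
    if not model:
        raise ValueError("model is required")
    if not messages:
        raise ValueError("messages must contain at least one message")
    for message in messages:
        if message.get("role") not in ALLOWED_ROLES:
            raise ValueError(f"invalid role: {message.get('role')!r}")
        if not isinstance(message.get("content"), str):
            raise ValueError("content must be a string")
    return {"model": model, "messages": messages}


chat_request = build_chat_request(
    "gpt-3.5-turbo",
    [
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Summarize this case in one sentence."},
    ],
)
print(json.dumps(chat_request, indent=2))
```

Because messages holds the conversation so far, a follow-up question is sent by appending the previous assistant answer and the new user message to the same list.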

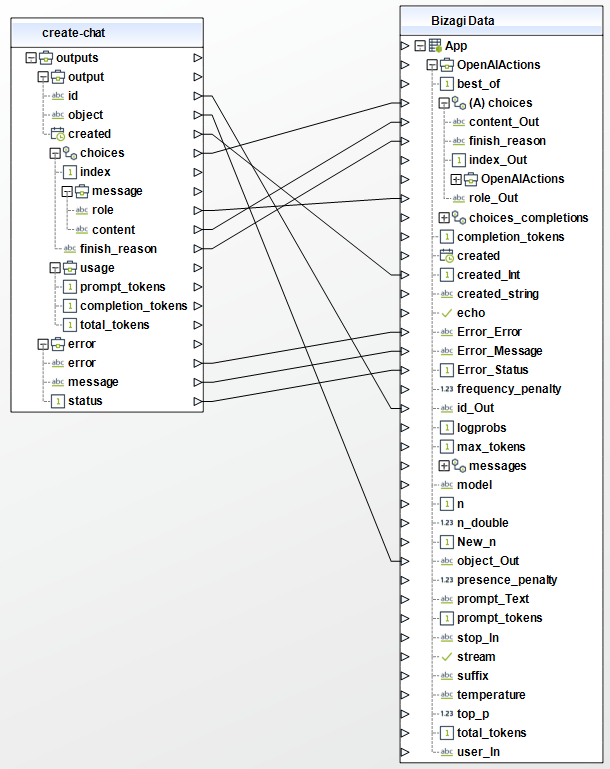

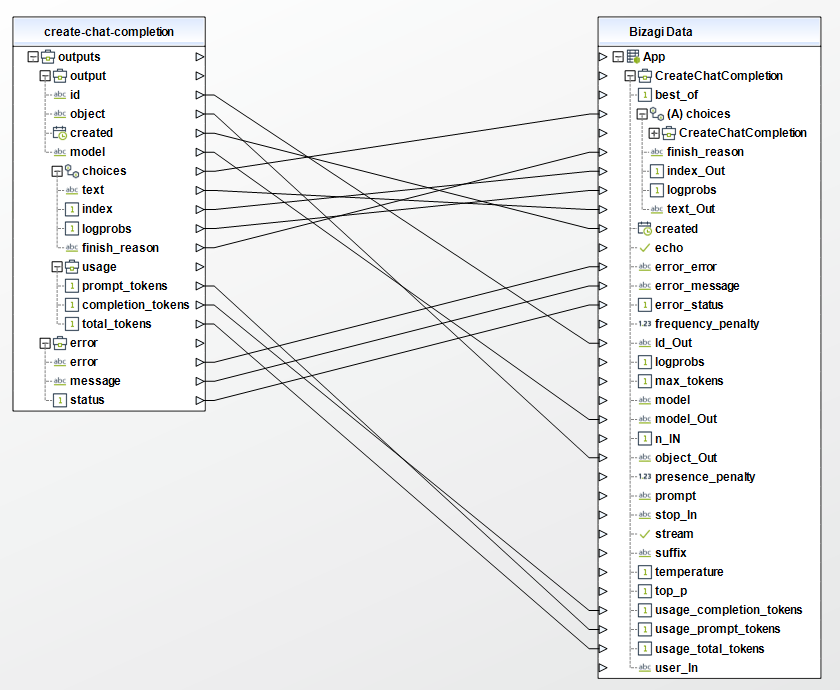

To configure the outputs of this action, you can map the output object to the corresponding entity in Bizagi and make sure you map the attributes of the entity appropriately.

The outputs of this connector are the following:

•id (string): Output identifier.

•object (string): Indicates the type of object returned. In this case, it will be "chat.completion".

•created (date): Answer's creation date.

•choices (object - array):

oindex (integer): Number to identify the current item answered.

omessage (object):

▪role (string): Role of the generated message. It can be "system", "user", or "assistant". The "system" role is used for system-generated messages that provide instructions or additional information.

▪content (string): Answer to the question from inputs.

ofinish_reason (string): Indicates why the model stopped generating a response. It is "stop" if the model completed normally, or "length" if the token limit (the max_tokens input) was reached.

•usage (object):

oprompt_tokens (integer): Tokens consumed by the input messages (the prompt).

ocompletion_tokens (integer): Tokens consumed by the generated answer.

ototal_tokens (integer): Total tokens consumed by the request.
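As an illustration of how the output structure nests, the sketch below reads the first answer and checks the token counters. The sample values are made up and trimmed to the fields used, the helper names are hypothetical, and the check assumes total_tokens is the sum of the other two counters.

```python
# Made-up output for illustration, trimmed to the fields used below.
sample_chat_output = {
    "id": "chatcmpl-example",
    "choices": [
        {
            "index": 0,
            "message": {"role": "assistant", "content": "Hello! How can I help?"},
            "finish_reason": "stop",
        }
    ],
    "usage": {"prompt_tokens": 12, "completion_tokens": 7, "total_tokens": 19},
}


def first_answer(output: dict) -> str:
    """Return the content of the first choice's message."""
    choices = output.get("choices") or []
    if not choices:
        raise ValueError("the output contains no choices")
    return choices[0]["message"]["content"]


def tokens_add_up(output: dict) -> bool:
    """Check that total_tokens equals prompt_tokens plus completion_tokens."""
    usage = output["usage"]
    return usage["prompt_tokens"] + usage["completion_tokens"] == usage["total_tokens"]


answer = first_answer(sample_chat_output)
```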

Create image

With this action, the connector generates a given number of images related to the text entered by the user.

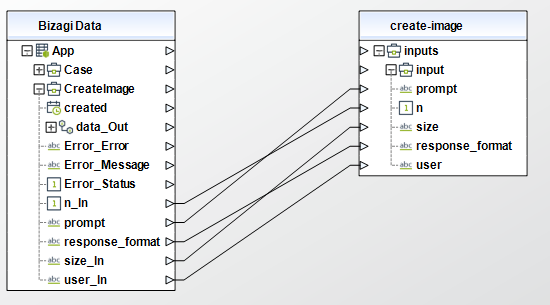

To configure its inputs, take into account the following descriptions:

•prompt (string - Required): A text description of the desired image(s). The maximum length is 1000 characters.

•n (integer - optional): The number of images to be generated. Must be between 1 and 10.

•size (string - optional): The size of the generated images. Must be one of 256x256, 512x512, or 1024x1024.

•response_format (string - optional): The format in which the generated images are returned. Must be one of url or b64_json.

•user (string - optional): A unique identifier representing your end-user, which can help OpenAI to monitor and detect abuse.

For more information visit the Open AI Create image documentation.
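The constraints listed above can be checked before the action is invoked. The following is a minimal sketch of such a check; `build_image_request` is a hypothetical helper, not part of the connector.

```python
ALLOWED_SIZES = {"256x256", "512x512", "1024x1024"}
ALLOWED_FORMATS = {"url", "b64_json"}
MAX_PROMPT_LENGTH = 1000


def build_image_request(prompt, n=None, size=None, response_format=None, user=None):
    """Validate the inputs and return only the fields that were provided."""
    if not prompt:
        raise ValueError("prompt is required")
    if len(prompt) > MAX_PROMPT_LENGTH:
        raise ValueError("prompt must not exceed 1000 characters")
    request = {"prompt": prompt}
    if n is not None:
        if not 1 <= n <= 10:
            raise ValueError("n must be between 1 and 10")
        request["n"] = n
    if size is not None:
        if size not in ALLOWED_SIZES:
            raise ValueError("size must be 256x256, 512x512, or 1024x1024")
        request["size"] = size
    if response_format is not None:
        if response_format not in ALLOWED_FORMATS:
            raise ValueError("response_format must be url or b64_json")
        request["response_format"] = response_format
    if user is not None:
        request["user"] = user
    return request


image_request = build_image_request("A watercolor of a lighthouse", n=2, size="512x512")
```

Optional inputs that are left empty are simply omitted from the request in this sketch.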

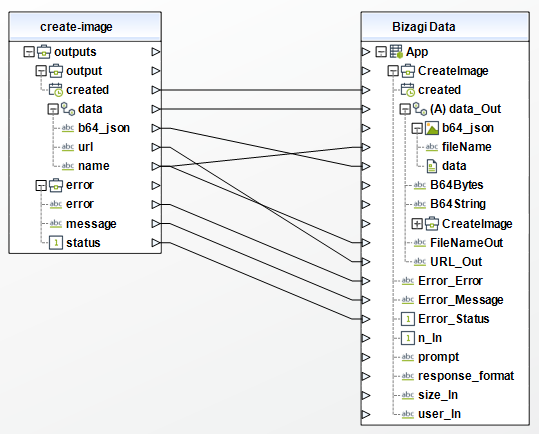

To configure the outputs of this action, you can map the output object to the corresponding entity in Bizagi and make sure you map the attributes of the entity appropriately.

The outputs of this connector are the following:

•created (date): Answer's creation date.

•data (object - array):

ourl (string): URL where the image can be downloaded.

ob64_json (string): base64 corresponding to the image.

oname (string): Name of the generated image(s). It is built with the following format: file_ + date now + .png.
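When response_format is b64_json, the b64_json value has to be decoded before it can be stored as a file. The sketch below shows that step with Python's standard base64 module. The sample entry is made up: its payload is only the 8-byte PNG signature, not a real image, and `image_bytes` is a hypothetical helper.

```python
import base64

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def image_bytes(entry: dict) -> bytes:
    """Decode the base64 payload of one element of the data array."""
    encoded = entry.get("b64_json")
    if not encoded:
        raise ValueError("entry has no b64_json value; use its url instead")
    return base64.b64decode(encoded)


# Made-up entry for illustration only.
sample_entry = {
    "b64_json": base64.b64encode(PNG_SIGNATURE).decode("ascii"),
    "name": "file_example.png",
}
decoded = image_bytes(sample_entry)
```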

Create completion

Given a user input, the completion action returns one or more predicted completions, and can also return the probabilities of alternative tokens at each position.

To configure its inputs, take into account the following descriptions:

•model (string - Required): ID of the model to use (text-davinci-003, text-davinci-002, text-curie-001, text-babbage-001, text-ada-001). You can use the List models API to see all of your available models, or see the Model overview for descriptions of them.

•prompt (string - optional): The prompt(s) to generate completions for, encoded as a string. Note that <|endoftext|> is the document separator that the model sees during training, so if a prompt is not specified the model will generate as if from the beginning of a new document.

•temperature (float - optional): What sampling temperature to use, between 0 and 2. Higher values like 0.8 will make the output more random, while lower values like 0.2 will make it more focused and deterministic.

•top_p (float - optional): An alternative to sampling with temperature, called nucleus sampling, where the model considers the results of the tokens with top_p probability mass. So 0.1 means only the tokens comprising the top 10% probability mass are considered.

•stream (boolean - optional): Whether to stream back partial progress. If set, tokens will be sent as data-only server-sent events as they become available, with the stream terminated by a data: [DONE] message.

•n (integer - optional): How many completions to generate for each prompt.

Further inputs may be used. For more information, visit the Open AI Create completion documentation.
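To make the temperature and top_p descriptions more concrete, the sketch below applies the textbook definitions of temperature scaling and nucleus sampling to a made-up three-token vocabulary. It illustrates the idea only. It is not Open AI's implementation, and the token scores are invented.

```python
import math


def apply_temperature(logits: dict, temperature: float) -> dict:
    """Softmax over logits divided by temperature (0 is excluded in this sketch)."""
    if temperature <= 0:
        raise ValueError("temperature must be greater than 0 here")
    scaled = {token: value / temperature for token, value in logits.items()}
    peak = max(scaled.values())  # subtract the maximum for numerical stability
    exps = {token: math.exp(value - peak) for token, value in scaled.items()}
    total = sum(exps.values())
    return {token: value / total for token, value in exps.items()}


def nucleus(probabilities: dict, top_p: float) -> list:
    """Most likely tokens whose cumulative probability first reaches top_p."""
    kept, cumulative = [], 0.0
    ranked = sorted(probabilities.items(), key=lambda item: item[1], reverse=True)
    for token, probability in ranked:
        kept.append(token)
        cumulative += probability
        if cumulative >= top_p:
            break
    return kept


logits = {"cat": 2.0, "dog": 1.0, "fish": 0.0}
focused = apply_temperature(logits, 0.2)  # low temperature: mass concentrates on "cat"
varied = apply_temperature(logits, 0.8)   # higher temperature: flatter distribution

probabilities = {"cat": 0.5, "dog": 0.3, "fish": 0.2}
narrow = nucleus(probabilities, 0.1)   # only the single most likely token survives
wider = nucleus(probabilities, 0.75)   # the two most likely tokens survive
```

Both inputs narrow or widen the set of tokens the model can pick from, so it is usually enough to adjust one of them and leave the other at its default.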

To configure the outputs of this action, you can map the output object to the corresponding entity in Bizagi and make sure you map the attributes of the entity appropriately.

The outputs of this connector are the following:

•id (string): Output identifier.

•object (string): Indicates the type of object returned, in this case, it will be "text_completion".

•created (date): Answer's creation date.

•model (string): Model used for the input.

•choices (object - array):

oindex (integer): Number to identify the current item answered.

otext (string): Completion generated for the prompt given in the inputs.

ologprobs (integer): Additional information about the probability distribution of the generated tokens. A null value indicates that the logprobs are not available.

ofinish_reason (string): Indicates why the model stopped generating a response. It is "stop" if the model completed normally, or "length" if the token limit (the max_tokens input) was reached.

•usage (object):

oprompt_tokens (integer): Tokens consumed by the prompt.

ocompletion_tokens (integer): Tokens consumed by the generated completion.

ototal_tokens (integer): Total tokens consumed by the request.
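When n is greater than 1, choices holds several completions, and some of them may have been cut off. The sketch below lists each choice and whether it finished normally, treating any finish_reason other than "stop" as incomplete. The sample output is made up and `summarize_choices` is a hypothetical helper.

```python
# Made-up output for illustration, trimmed to the fields used below.
sample_completion_output = {
    "object": "text_completion",
    "model": "text-davinci-003",
    "choices": [
        {"index": 0, "text": "Paris.", "logprobs": None, "finish_reason": "stop"},
        {"index": 1, "text": "The capital of", "logprobs": None, "finish_reason": "length"},
    ],
}


def summarize_choices(output: dict) -> list:
    """Return (index, text, finished_normally) for every choice, ordered by index."""
    choices = sorted(output.get("choices", []), key=lambda choice: choice["index"])
    return [
        (choice["index"], choice["text"], choice.get("finish_reason") == "stop")
        for choice in choices
    ]


summary = summarize_choices(sample_completion_output)
complete_texts = [text for _, text, finished in summary if finished]
```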

Create edit

Edit lets you modify the user input based on a specific instruction. For example, you can ask the connector to correct the spelling of the user input.

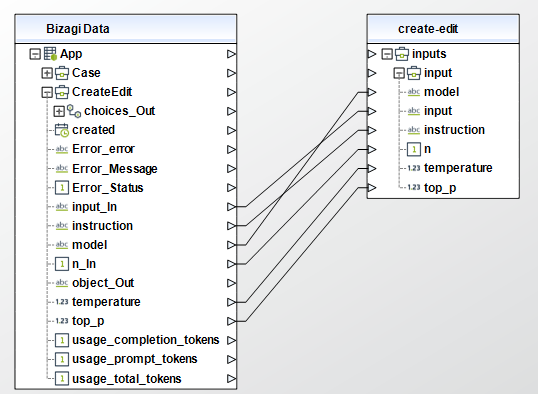

To configure its inputs, take into account the following descriptions:

•model (string - Required): ID of the model to use. This endpoint accepts the text-davinci-edit-001 and code-davinci-edit-001 models.

•input (string - optional): The input text to use as a starting point for the edit.

•instruction (string - Required): The instruction that tells the model how to edit the input.

•temperature (float - optional): What sampling temperature to use, between 0 and 2. Higher values like 0.8 will make the output more random, while lower values like 0.2 will make it more focused and deterministic.

•top_p (float - optional): An alternative to sampling with temperature, called nucleus sampling, where the model considers the results of the tokens with top_p probability mass. So 0.1 means only the tokens comprising the top 10% probability mass are considered.

•n (integer - optional): How many edits to generate for the input and instruction.
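The spelling-correction example mentioned earlier could be expressed with these inputs as follows. This is a sketch under the assumption that the connector forwards the fields under the same names; `build_edit_request` and the sample sentence are hypothetical.

```python
def build_edit_request(instruction, input_text="", model="text-davinci-edit-001",
                       n=None, temperature=None, top_p=None):
    """Validate the inputs and return the request body for an edit."""
    if not instruction:
        raise ValueError("instruction is required")
    request = {"model": model, "input": input_text, "instruction": instruction}
    if n is not None:
        if n < 1:
            raise ValueError("n must be at least 1")
        request["n"] = n
    if temperature is not None:
        if not 0 <= temperature <= 2:
            raise ValueError("temperature must be between 0 and 2")
        request["temperature"] = temperature
    if top_p is not None:
        request["top_p"] = top_p
    return request


edit_request = build_edit_request(
    "Fix the spelling mistakes",
    "What day of the wek is it?",
)
```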

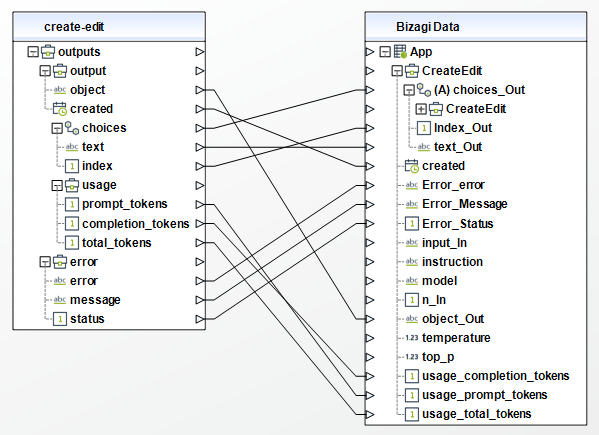

To configure the outputs of this action, you can map the output object to the corresponding entity in Bizagi and make sure you map the attributes of the entity appropriately.

The outputs of this connector are the following:

•object (string): Indicates the type of object returned, in this case, it will be "edit".

•created (date): Answer's creation date.

•choices (object - array):

oindex (integer): Number to identify the current item answered.

otext (string): Edited text produced from the input and the instruction.

•usage (object):

oprompt_tokens (integer): Tokens consumed by the input and the instruction.

ocompletion_tokens (integer): Tokens consumed by the generated edit.

ototal_tokens (integer): Total tokens consumed by the request.

Create moderation

Determines whether the user input belongs to one of the restricted categories. Those categories are shown below.

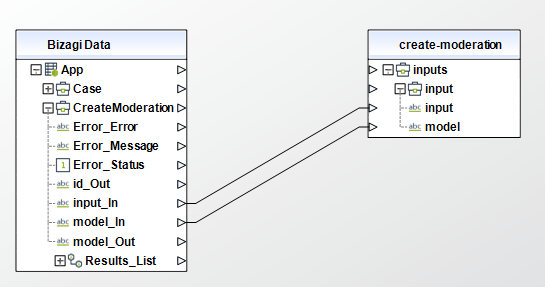

To configure its inputs, take into account the following descriptions:

•input (string - Required): The input text to classify.

•model (string - optional): The content moderation model to use. Two models are available: text-moderation-stable and text-moderation-latest.

To configure the outputs of this action, you can map the output object to the corresponding entity in Bizagi and make sure you map the attributes of the entity appropriately.

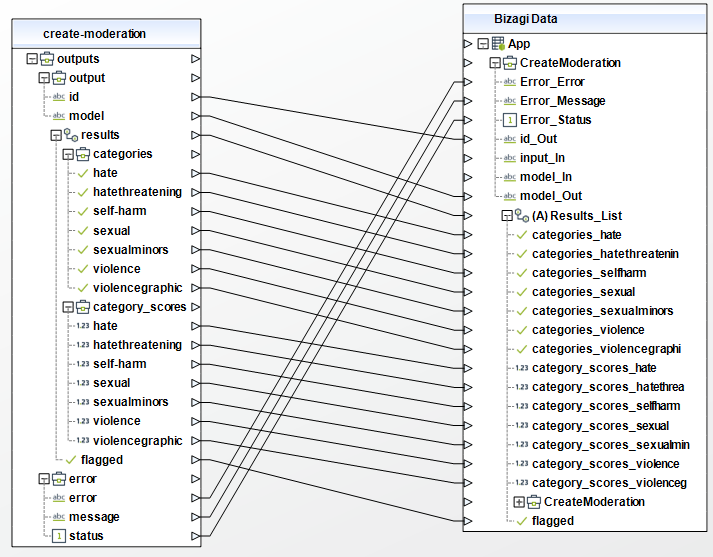

The outputs of this connector are the following:

•id (string): Output identifier.

•model (string): Model used.

•results (object - array): Results as boolean or float score.

ocategories (object): Boolean values indicating whether the user input belongs to each restricted category. The categories are hate, hatethreatening, self-harm, sexual, sexualminors, violence, and violencegraphic.

ocategory_scores (object): Float values indicating the matching probability for each restricted category. The categories are hate, hatethreatening, self-harm, sexual, sexualminors, violence, and violencegraphic.

oflagged (boolean): Indicates whether the input was flagged as belonging to at least one of the restricted categories.
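As an illustration of how one element of results might be read, the sketch below lists the categories marked true and finds the highest score. The scores are made up, the category names follow the list above, and both helper names are hypothetical.

```python
# Made-up element of the results array, for illustration only.
sample_result = {
    "categories": {
        "hate": False, "hatethreatening": False, "self-harm": False,
        "sexual": False, "sexualminors": False,
        "violence": True, "violencegraphic": False,
    },
    "category_scores": {
        "hate": 0.01, "hatethreatening": 0.0, "self-harm": 0.0,
        "sexual": 0.0, "sexualminors": 0.0,
        "violence": 0.91, "violencegraphic": 0.12,
    },
    "flagged": True,
}


def flagged_categories(result: dict) -> list:
    """Return the names of the categories whose boolean value is true."""
    return sorted(name for name, hit in result["categories"].items() if hit)


def highest_score(result: dict) -> tuple:
    """Return the (category, score) pair with the largest score."""
    return max(result["category_scores"].items(), key=lambda item: item[1])


hits = flagged_categories(sample_result)
top_category, top_score = highest_score(sample_result)
```

A process could, for example, route a case to manual review whenever flagged is true and record the categories that triggered it.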

Last Updated 6/20/2023 9:54:39 AM