Overview

The MS Computer vision connector for Bizagi is available for download at Bizagi Connectors Xchange.

Through this connector, you can connect your Bizagi processes to a Microsoft Cognitive Services account (https://www.microsoft.com/cognitive-services/) in order to use the cognitive services (AI) API.

For more information about this connector's capabilities, visit Bizagi Connectors Xchange.

Before you start

In order to test and use this connector, you will need:

1. Bizagi Studio previously installed.

2. This connector previously installed, either via the Connectors Xchange as described at https://help.bizagi.com/platform/en/index.html?Connectors_Xchange.htm, or through a manual installation as described at https://help.bizagi.com/platform/en/index.html?connectors_setup.htm

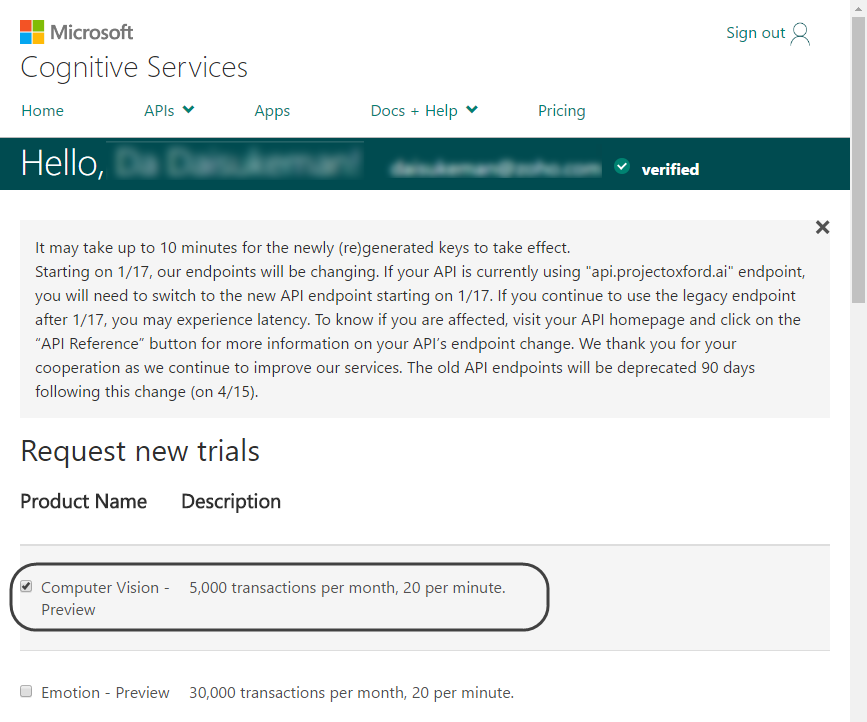

3. An account at https://www.microsoft.com/cognitive-services/ with access to the Computer Vision API (trial or enterprise subscription).

Configuring the connector

To configure the connector (i.e., its authentication parameters), follow the steps presented in the Configuration chapter at https://help.bizagi.com/platform/en/index.html?connectors_setup.htm

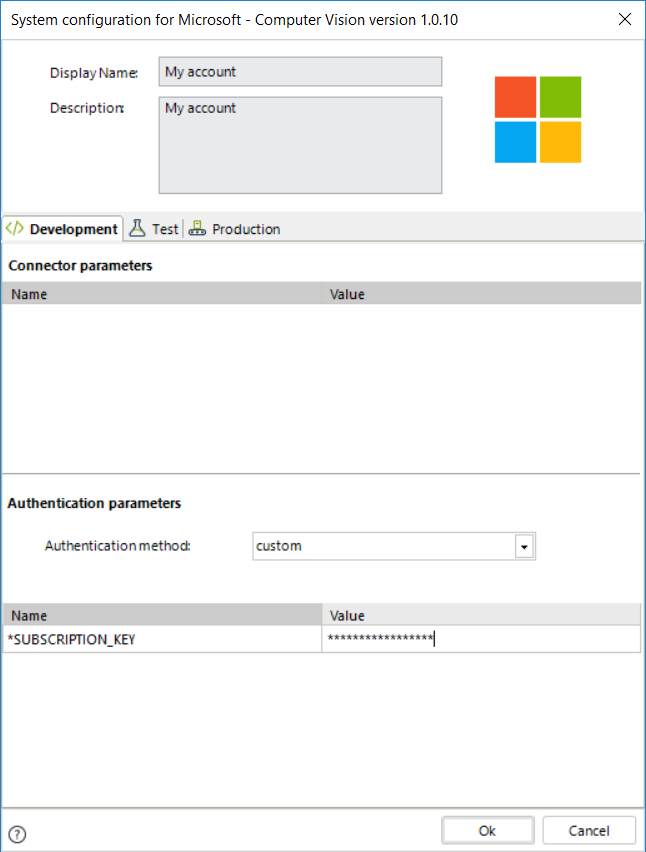

For this configuration, consider the following authentication parameters:

• Authentication method: custom.

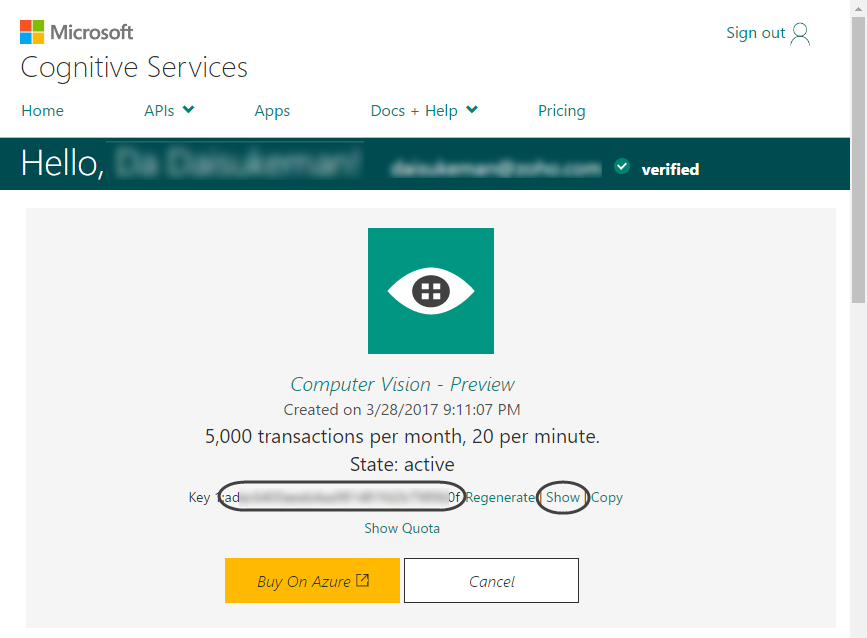

• Subscription key: Use the text key provided to you when enabling the Computer Vision API.

You may click the Show option to view it.

Using the connector

This connector exposes one method of Microsoft Cognitive Services, oriented to machine learning: it has artificial intelligence interpret a photo and respond with the tags, categories and descriptive words related to the image.

For general guidance on how and where to configure the use of a connector, refer to https://help.bizagi.com/platform/en/index.html?Connectors_Studio.htm.

When using the connector, consider the following details for its available method.

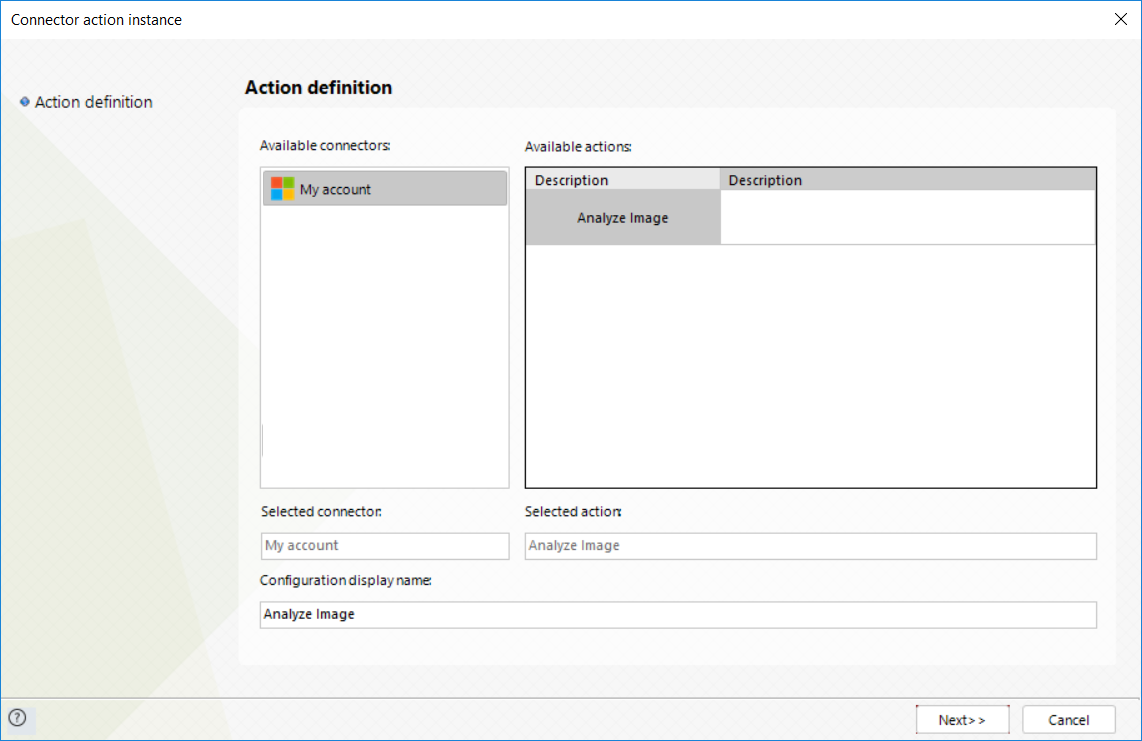

Analyze image

This method lets you send an image so that the service interprets what the image is about, or what it contains.

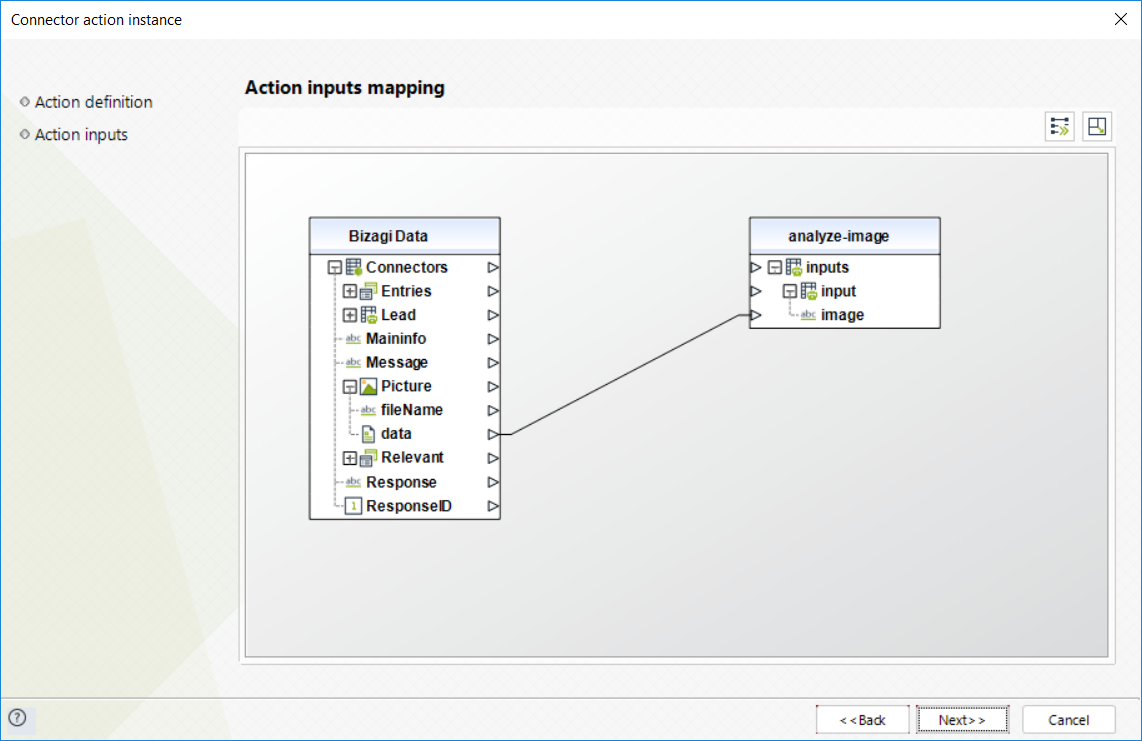

To configure its inputs, consider:

• image: Map the data field of either an Image attribute (recommended) or a File attribute (provided it contains an image) in Bizagi.

Bizagi automatically sends the array of bytes representing the image, as expected by the service.
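For orientation only, the snippet below sketches what a comparable raw request might look like outside Bizagi. It is not the connector's implementation. The endpoint URL, region, API version, the `visualFeatures` query parameter and the header names are assumptions based on Microsoft's public REST conventions for the Computer Vision API, and `build_analyze_request` is a hypothetical helper. The request is only built, never sent.

```python
import urllib.parse
import urllib.request

# Assumed endpoint: the region and API version depend on your subscription.
ENDPOINT = "https://westus.api.cognitive.microsoft.com/vision/v1.0/analyze"


def build_analyze_request(image_bytes: bytes, subscription_key: str) -> urllib.request.Request:
    """Build (but do not send) a POST request carrying the raw image bytes."""
    # Assumed feature list, chosen to match the outputs this connector exposes.
    query = urllib.parse.urlencode(
        {"visualFeatures": "Categories,Tags,Description,Faces"}
    )
    return urllib.request.Request(
        url=f"{ENDPOINT}?{query}",
        data=image_bytes,  # the image travels as a plain array of bytes
        headers={
            "Content-Type": "application/octet-stream",
            "Ocp-Apim-Subscription-Key": subscription_key,  # assumed header name
        },
        method="POST",
    )


if __name__ == "__main__":
    # Placeholder bytes and key, for illustration only.
    request = build_analyze_request(b"placeholder image bytes", "YOUR-SUBSCRIPTION-KEY")
    print(request.get_method(), request.full_url)
```

Within Bizagi none of this is needed: mapping the Image or File attribute is enough, and the subscription key comes from the connector's configuration.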

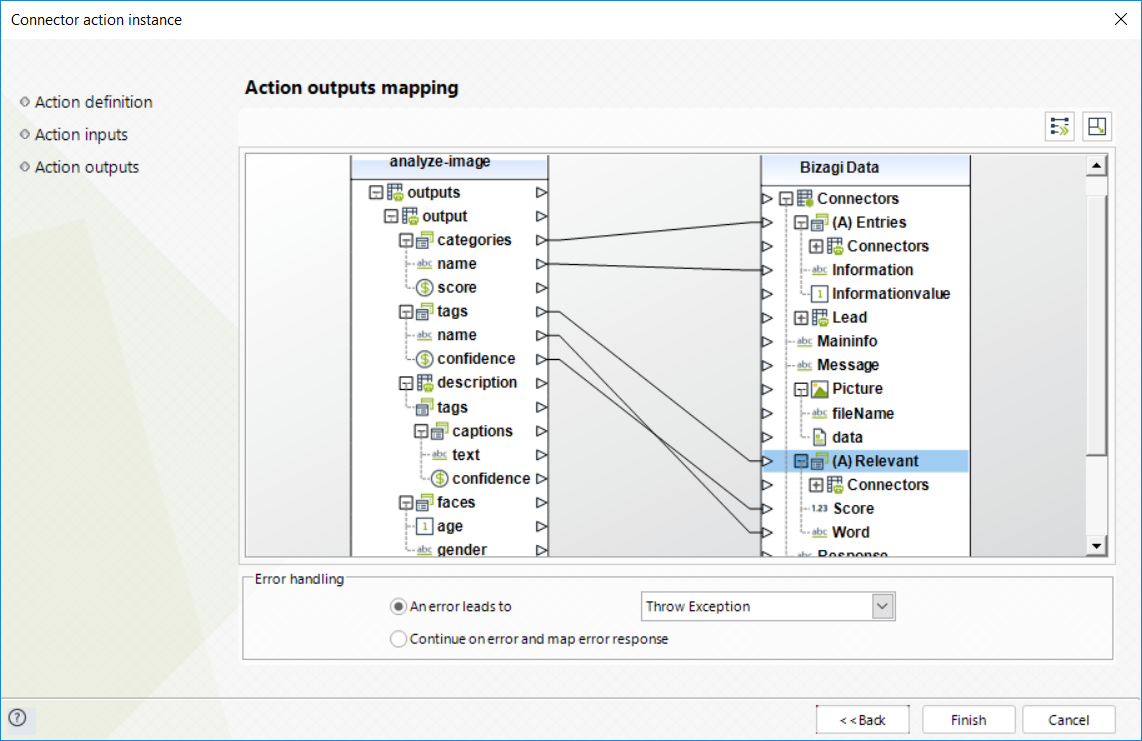

To configure its outputs when getting started and testing, you may map:

• categories: Map this into a collection in your data model.

By also mapping its inner name and score elements, you can store the list of categories that the cognitive services identify for the image (higher scores mean more relevance).

• tags (related): Map this into a collection in your data model.

By also mapping its inner name and confidence elements, you can store the list of tags (words) that the cognitive services find applicable to the image, each with a degree of confidence (the higher the confidence, the more certain the service is that it recognized the element labeled by the word).

• tags (descriptive): Map this into a collection in your data model.

By also mapping its inner text and confidence elements, you can store the list of descriptive words that the cognitive services use to describe the image, each with a degree of confidence (the higher the confidence, the more certain the service is).

• faces: Map this into a collection in your data model.

By also mapping its inner age and gender elements, you can store the information relevant to the faces (i.e., people) in the image.
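To make the shape of these outputs concrete, the sketch below flattens a result into rows, one row per item of each collection, and orders them by relevance. The sample data is invented, and the keys `descriptive_tags` and the helpers `to_rows` and `by_relevance` are hypothetical names used for illustration. Only the inner element names (name, score, confidence, text, age, gender) come from the outputs described above.

```python
from typing import Any, Dict, List, Sequence, Tuple

# Hypothetical sample shaped after the outputs listed above; all values are invented.
SAMPLE_RESULT: Dict[str, List[Dict[str, Any]]] = {
    "categories": [
        {"name": "outdoor_", "score": 0.05},
        {"name": "outdoor_mountain", "score": 0.92},
    ],
    "tags": [
        {"name": "sky", "confidence": 0.95},
        {"name": "mountain", "confidence": 0.99},
    ],
    "descriptive_tags": [
        {"text": "a snowy mountain under a blue sky", "confidence": 0.88},
    ],
    "faces": [{"age": 31, "gender": "Female"}],
}


def to_rows(
    result: Dict[str, List[Dict[str, Any]]], output: str, fields: Sequence[str]
) -> List[Tuple[Any, ...]]:
    """Turn one output list into rows, as a collection in a data model would hold them."""
    return [tuple(item.get(field) for field in fields) for item in result.get(output, [])]


def by_relevance(rows: List[Tuple[Any, ...]], score_index: int = 1) -> List[Tuple[Any, ...]]:
    """Order rows so that the highest score or confidence comes first."""
    return sorted(rows, key=lambda row: row[score_index], reverse=True)


if __name__ == "__main__":
    for name, score in by_relevance(to_rows(SAMPLE_RESULT, "categories", ("name", "score"))):
        print(f"category {name}: {score}")
    for name, confidence in by_relevance(to_rows(SAMPLE_RESULT, "tags", ("name", "confidence"))):
        print(f"tag {name}: {confidence}")
    for age, gender in to_rows(SAMPLE_RESULT, "faces", ("age", "gender")):
        print(f"face: age {age}, gender {gender}")
```

Sorting by score or confidence is a design choice of this sketch, convenient when a process should act only on the most relevant category or tag.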

For more information about this method's use, refer to Microsoft's official documentation at https://www.microsoft.com/cognitive-services/en-us/Computer-Vision-API/documentation.

Last Updated 10/27/2022 11:24:08 AM